Read time: 10 minutes

Picture this: your .NET API is cruising along in production, handling a few hundred requests per second without breaking a sweat.

Then Black Friday hits, or your app goes viral, and suddenly you’re watching your single container instance buckle under the load.

Response times spike to 20+ seconds. Users get timeout errors.

Sound familiar?

This is exactly why horizontal scaling exists. Instead of throwing bigger hardware at the problem, you spin up multiple identical instances to share the load.

It’s the difference between hiring one superhuman cashier versus opening 10 normal checkout lanes.

But here’s the thing: most developers have never actually seen horizontal scaling work in practice.

Today, I’m going to fix that. We’ll build a simple .NET API endpoint, deploy it to Azure Container Apps, and then absolutely hammer it with heavy load.

The results will show you exactly why horizontal scaling is essential for any production application.

Let’s dive in.

A simple endpoint to stress

To see the benefits of horizontal scaling, we need an endpoint in our .NET backend API that we can hit aggressively.

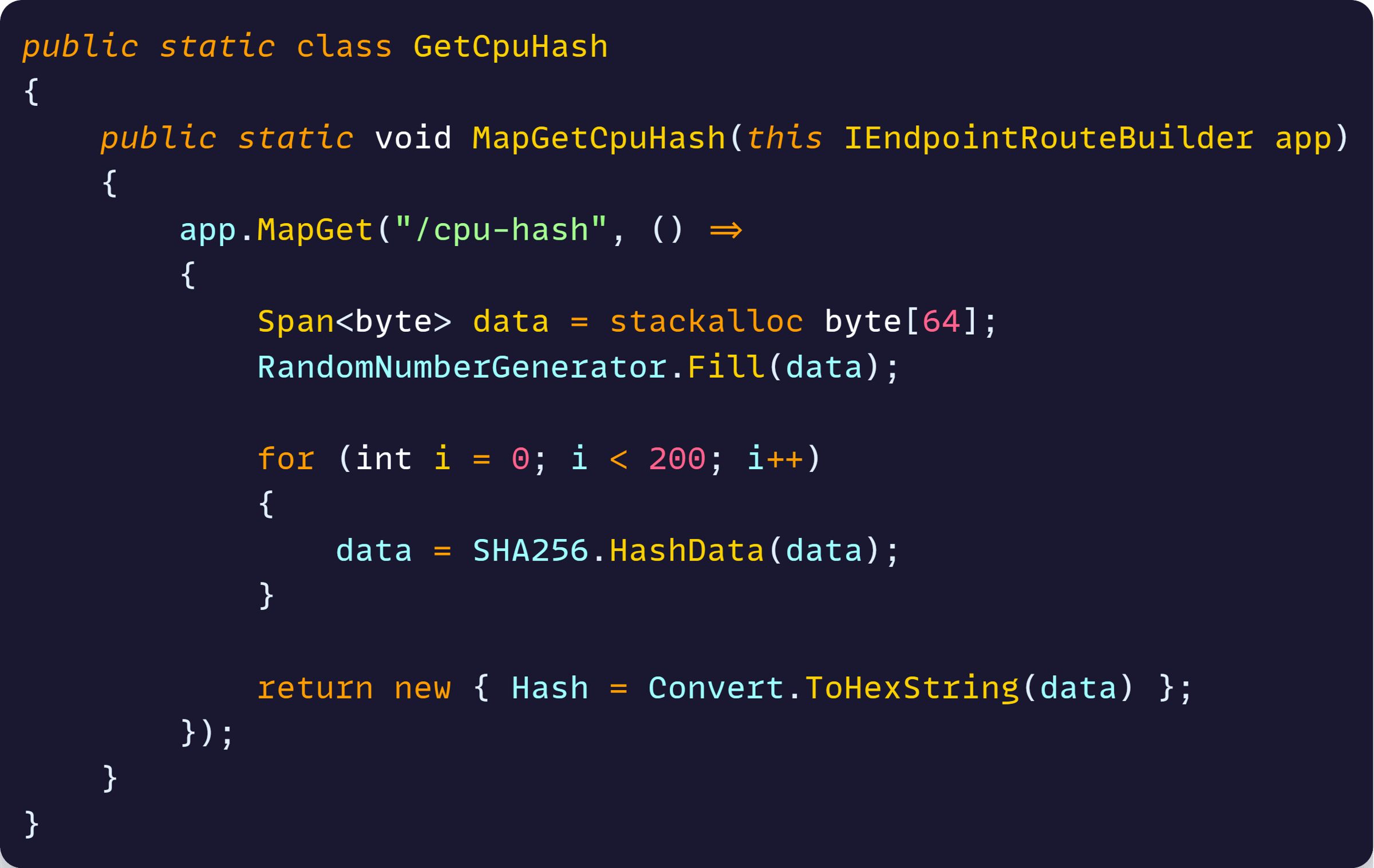

Let’s use something like this:

This endpoint performs intensive cryptographic work on every request:

- Generates 64 bytes of random data

- Runs SHA256 hashing 200 times in succession

- Returns the final hash as a hex string

Each request burns significant CPU cycles, making it perfect for testing how different numbers of container instances behave under load.
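The work described above can be sketched as a small ASP.NET Core minimal API. This is only an illustration under stated assumptions: the /hash route is a hypothetical name, and the project is assumed to target .NET 6 or later with the web SDK's implicit usings enabled.

```csharp
using System.Security.Cryptography;

var builder = WebApplication.CreateBuilder(args);
var app = builder.Build();

// "/hash" is a hypothetical route name chosen for this sketch.
app.MapGet("/hash", () =>
{
    // Start from 64 bytes of cryptographically strong random data.
    byte[] data = RandomNumberGenerator.GetBytes(64);

    // Hash the result of the previous round 200 times in succession.
    for (int i = 0; i < 200; i++)
    {
        data = SHA256.HashData(data);
    }

    // Return the final 32-byte hash as a hex string.
    return Convert.ToHexString(data);
});

app.Run();
```

Because nothing here waits on I/O, each request keeps a CPU core busy for its whole duration, which is what makes the replica count the deciding factor under load.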

Now, let me clarify something about this test.

Why no database calls or external dependencies?

While real applications often involve database calls, including them in our scaling test would introduce too many variables.

Database connection pools, query optimization, and external service limits can become bottlenecks that mask the benefits of horizontal container scaling.

By using CPU-intensive work instead, we can cleanly demonstrate how additional container instances improve performance when the bottleneck is actually within our application code, not external dependencies.

Next, let’s prepare our infrastructure.

Configuring scaling rules

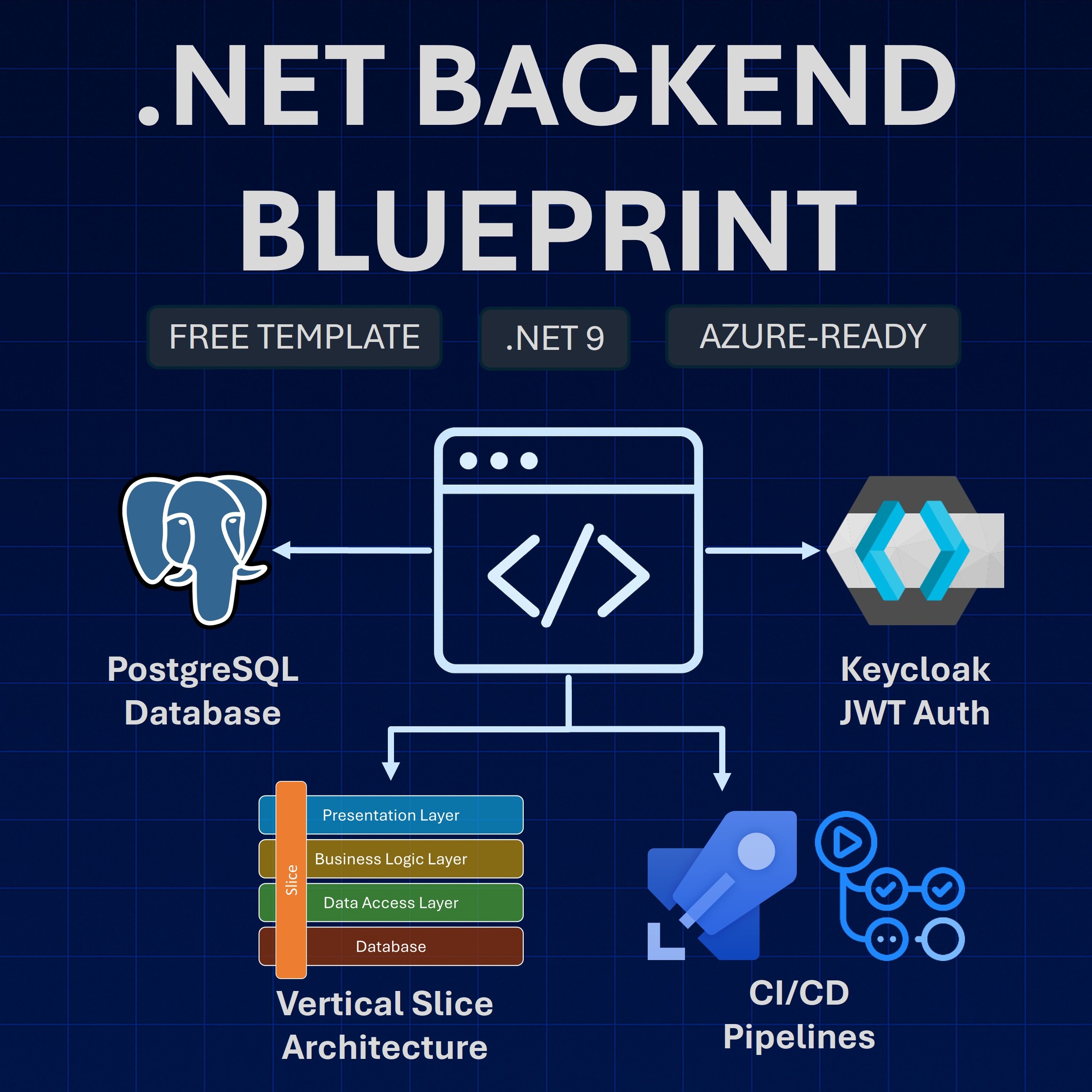

Last week, I showed how to easily customize your Azure Container Apps infrastructure via the built-in extensibility points available in .NET Aspire’s Azure hosting APIs.

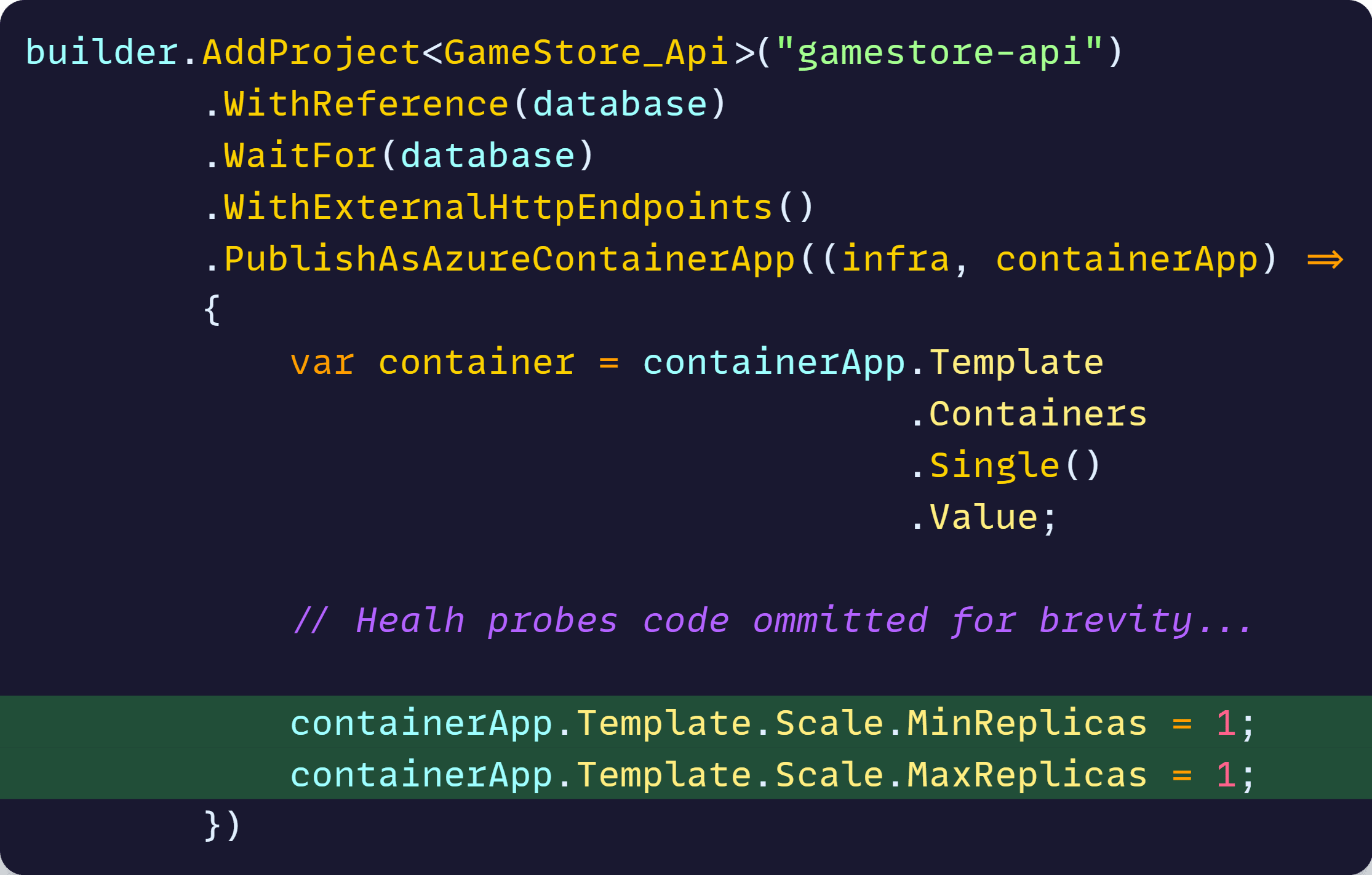

Well, we can use the same approach to define the scaling rules for our .NET API, like this:

This configures our container app so that there is always exactly one replica (a container instance) serving requests, no matter how much load the API receives.

This is not what you want to do in most cases, but let’s use it as our baseline to see the initial performance of the API under heavy load.
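A minimal sketch of what such a single-replica configuration might look like in the AppHost Program.cs. It assumes the Aspire.Hosting.Azure.AppContainers package and its PublishAsAzureContainerApp customization callback. The project type Projects.Api and the resource name "api" are hypothetical, and the exact property path (and whether an experimental-API warning has to be suppressed) can differ between Aspire versions.

```csharp
// AppHost Program.cs (sketch; Projects.Api and "api" are hypothetical names)
var builder = DistributedApplication.CreateBuilder(args);

builder.AddProject<Projects.Api>("api")
    .WithExternalHttpEndpoints()
    .PublishAsAzureContainerApp((infrastructure, containerApp) =>
    {
        // Pin the replica count: never fewer than 1, never more than 1.
        containerApp.Template.Scale.MinReplicas = 1;
        containerApp.Template.Scale.MaxReplicas = 1;
    });

builder.Build().Run();
```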

We can now deploy our API and the related infrastructure with a single azd up call. If you are new to this, I covered it in more detail in my .NET Aspire Tutorial.

Now let’s run our test.

Stress testing with 1 replica

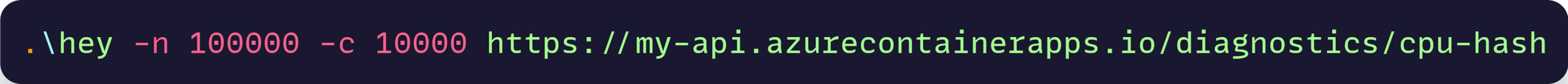

For this test, I’ll use hey, a small but powerful open-source command-line tool designed to send some load to any web application.

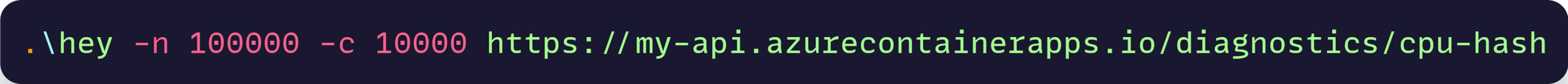

Now, after my app is fully provisioned in Container Apps, here’s the hey command I ran:

That command does the following:

- Sends 100,000 total requests to our new API endpoint (hey’s -n option)

- Uses 10,000 concurrent connections, meaning users hitting the API simultaneously (hey’s -c option)

In simple terms, this simulates 10,000 users all hammering our API at the exact same time, with each “user” making 10 requests, for 100,000 requests in total.

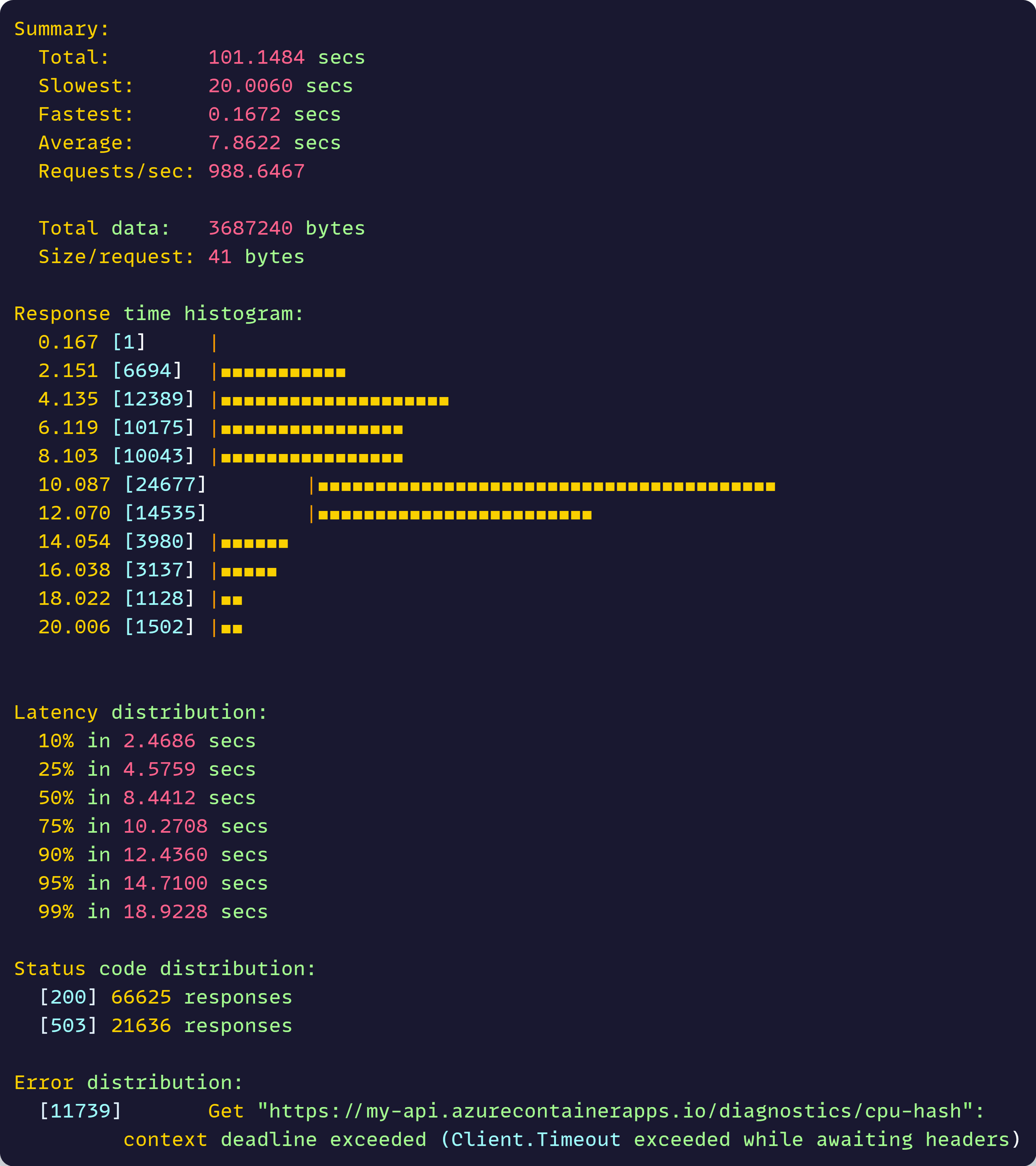

The test took about 100 seconds and came back with this (simplified for brevity):

What those numbers mean is that the system is completely overwhelmed:

- 33% failure rate: Only 66,625 requests succeeded out of 100,000 sent

- 21,636 got “503 Service Unavailable” errors - the server rejecting requests it can’t handle

- 11,739 requests timed out completely - never got a response at all

- Terrible response times: Average of 7.86 seconds, with some taking up to 20 seconds

- Low throughput: Only about 989 requests per second when we’re hammering it with 10,000 concurrent connections

The key insight: With 10,000 concurrent users hitting our CPU-intensive endpoint, 1 replica simply can’t keep up. It’s rejecting or timing out on 1 in 3 requests.
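As a quick sanity check on those figures, the three outcome counts add up to exactly the 100,000 requests sent, and the failure rate follows directly from them. A small sketch using only the numbers reported above:

```csharp
using System;

// Counts reported for the single-replica run.
const int total = 100_000;
const int succeeded = 66_625;
const int unavailable = 21_636;   // 503 Service Unavailable
const int timedOut = 11_739;      // never got a response

int failed = unavailable + timedOut;           // 33,375 failed requests
double failureRate = 100.0 * failed / total;   // 33.375, roughly 1 in 3

Console.WriteLine($"Accounted for: {succeeded + failed} of {total}");
Console.WriteLine($"Failure rate: {failureRate:F1}%");
```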

Now, let’s take advantage of containers and their natural horizontal scaling capabilities.

Updating the scaling rules

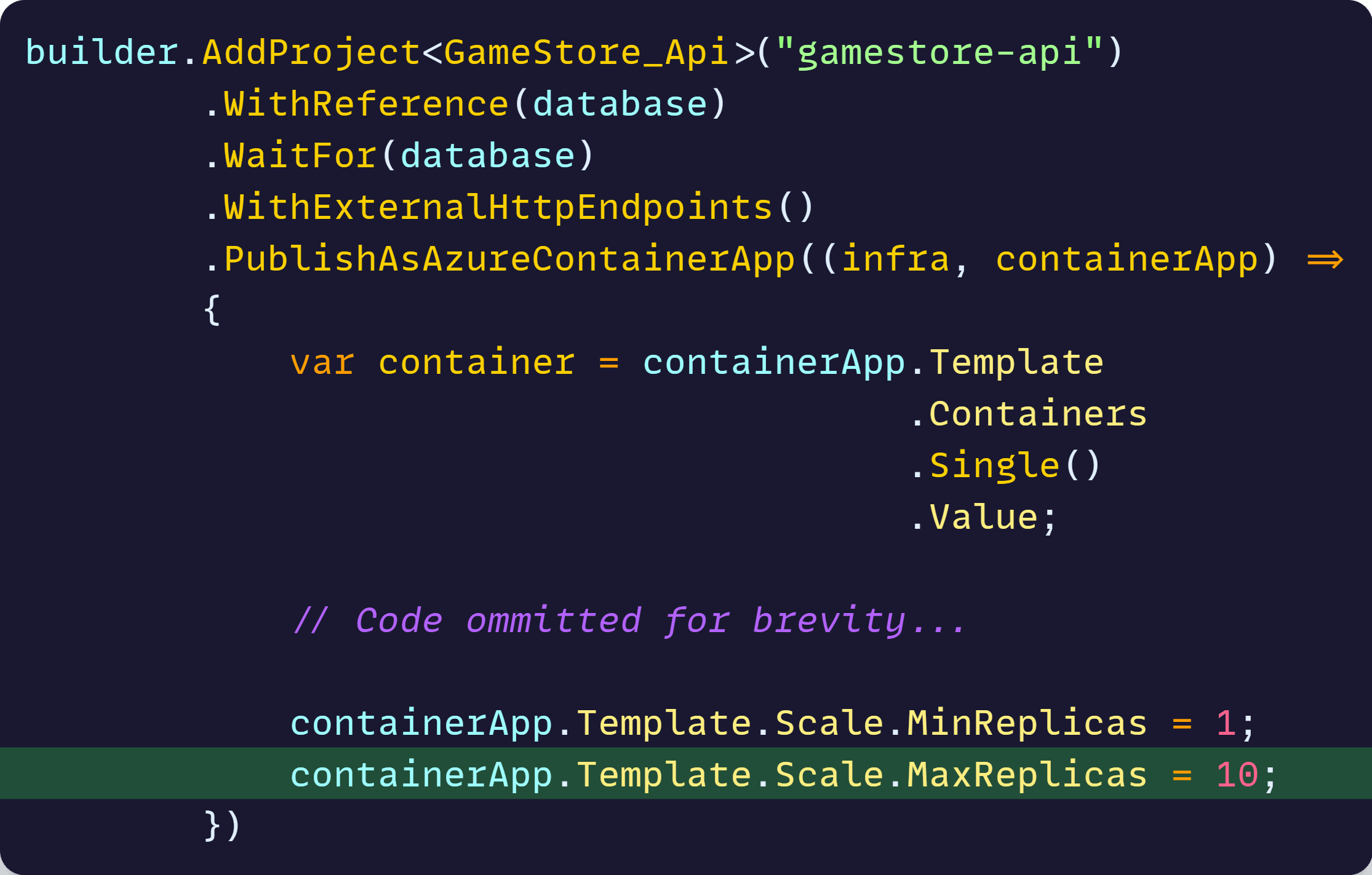

The beauty of containers is that running 10 of them is not any harder than running 1, and since we are using .NET Aspire, all we need to change is one line in our AppHost Program.cs:

This will tell Container Apps that now it can use up to 10 replicas of our containerized .NET API to handle the incoming load.
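Assuming the scale settings live in a PublishAsAzureContainerApp callback in the AppHost (an assumption, as are the hypothetical Projects.Api type and "api" resource name), the one-line change would be raising MaxReplicas from 1 to 10:

```csharp
// AppHost Program.cs (sketch; Projects.Api and "api" are hypothetical names)
var builder = DistributedApplication.CreateBuilder(args);

builder.AddProject<Projects.Api>("api")
    .WithExternalHttpEndpoints()
    .PublishAsAzureContainerApp((infrastructure, containerApp) =>
    {
        containerApp.Template.Scale.MinReplicas = 1;
        // The only change from the baseline: allow scaling out to 10 replicas.
        containerApp.Template.Scale.MaxReplicas = 10;
    });

builder.Build().Run();
```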

How will it know when it is time to scale? For this, it uses an HTTP scaling rule which, by default, will spin up a new replica if any existing replica receives more than 10 concurrent requests.
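To build some intuition for that rule, here is a deliberately simplified model of concurrency-based scaling: one replica per 10 in-flight requests, clamped to the configured replica range. It is not how Container Apps actually computes its scaling decisions (the real scaler samples metrics over time and scales gradually); the DesiredReplicas helper is a hypothetical name used only for this sketch.

```csharp
using System;

// Simplified model: one replica per `targetConcurrency` concurrent requests,
// clamped between the configured minimum and maximum replica counts.
static int DesiredReplicas(int concurrentRequests, int targetConcurrency,
                           int minReplicas, int maxReplicas)
{
    int needed = (int)Math.Ceiling((double)concurrentRequests / targetConcurrency);
    return Math.Clamp(needed, minReplicas, maxReplicas);
}

Console.WriteLine(DesiredReplicas(5, 10, 1, 10));      // 1
Console.WriteLine(DesiredReplicas(45, 10, 1, 10));     // 5
Console.WriteLine(DesiredReplicas(1_500, 10, 1, 10));  // 10
```

Under this model, any sustained load above roughly 100 concurrent requests is enough to push the app to its 10-replica ceiling.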

To deploy this scaling update, we can run azd up again or, to make it quicker, use the azd deploy command, which containerizes and deploys the API but won’t redeploy any infra.

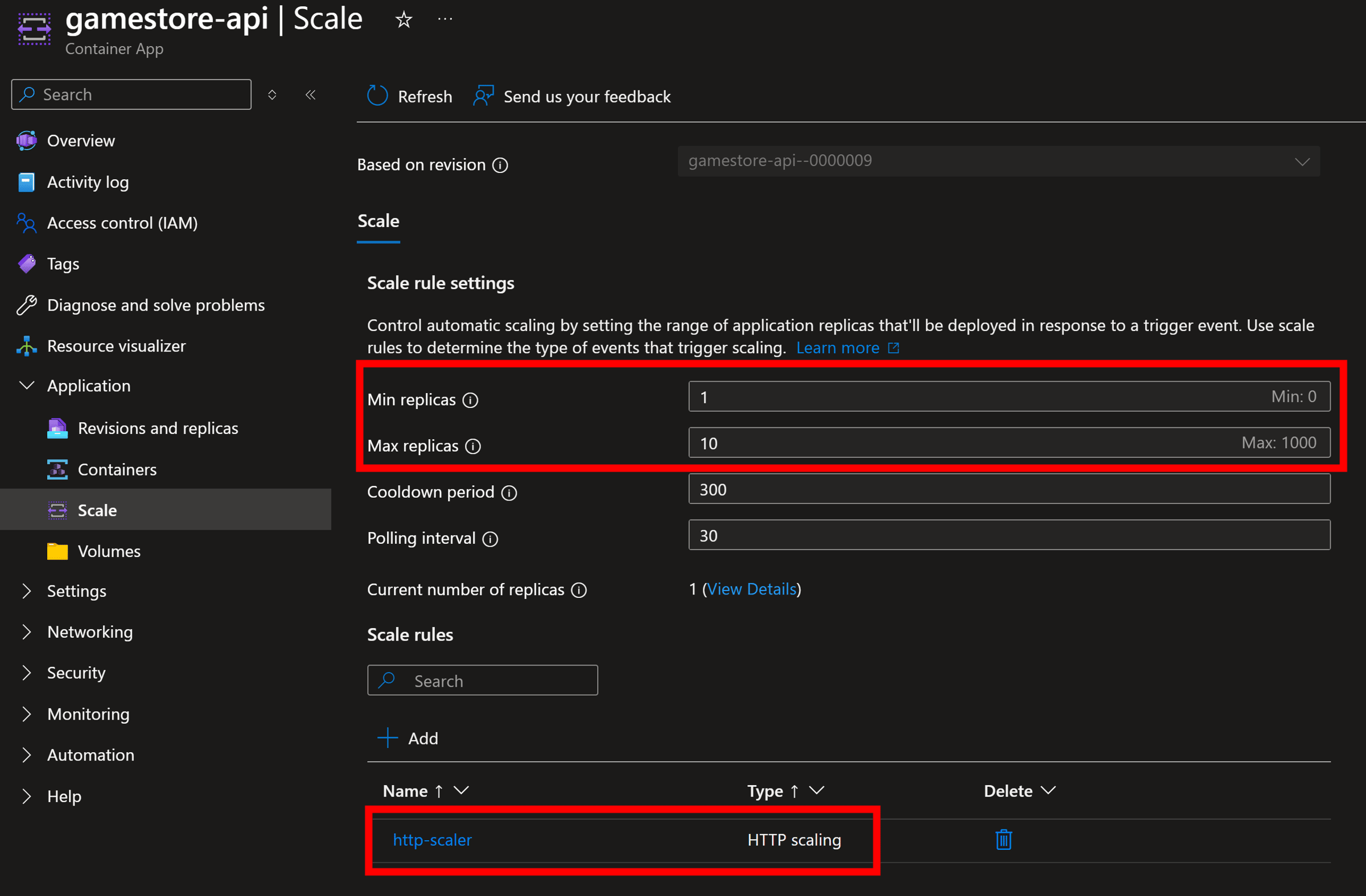

After deployment, our scale rule settings will look like this in the Azure Portal:

Now, let’s run that test again.

Stress testing with 10 replicas (max)

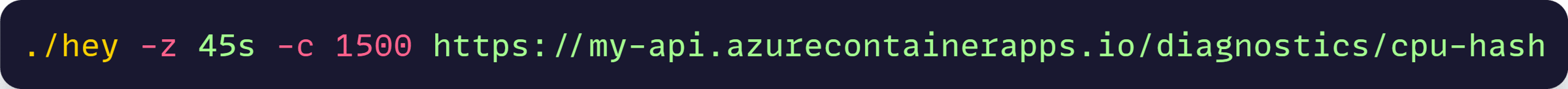

Given our scaling rules, we may want to warm things up first, so that most of those 10 replicas come alive before the real test.

For this, we can run this initial hey command:

This is similar to our previous command, but with 2 differences:

- It runs the test for exactly 45 seconds (duration-based instead of request-count based, via hey’s -z option)

- It uses only 1,500 concurrent connections

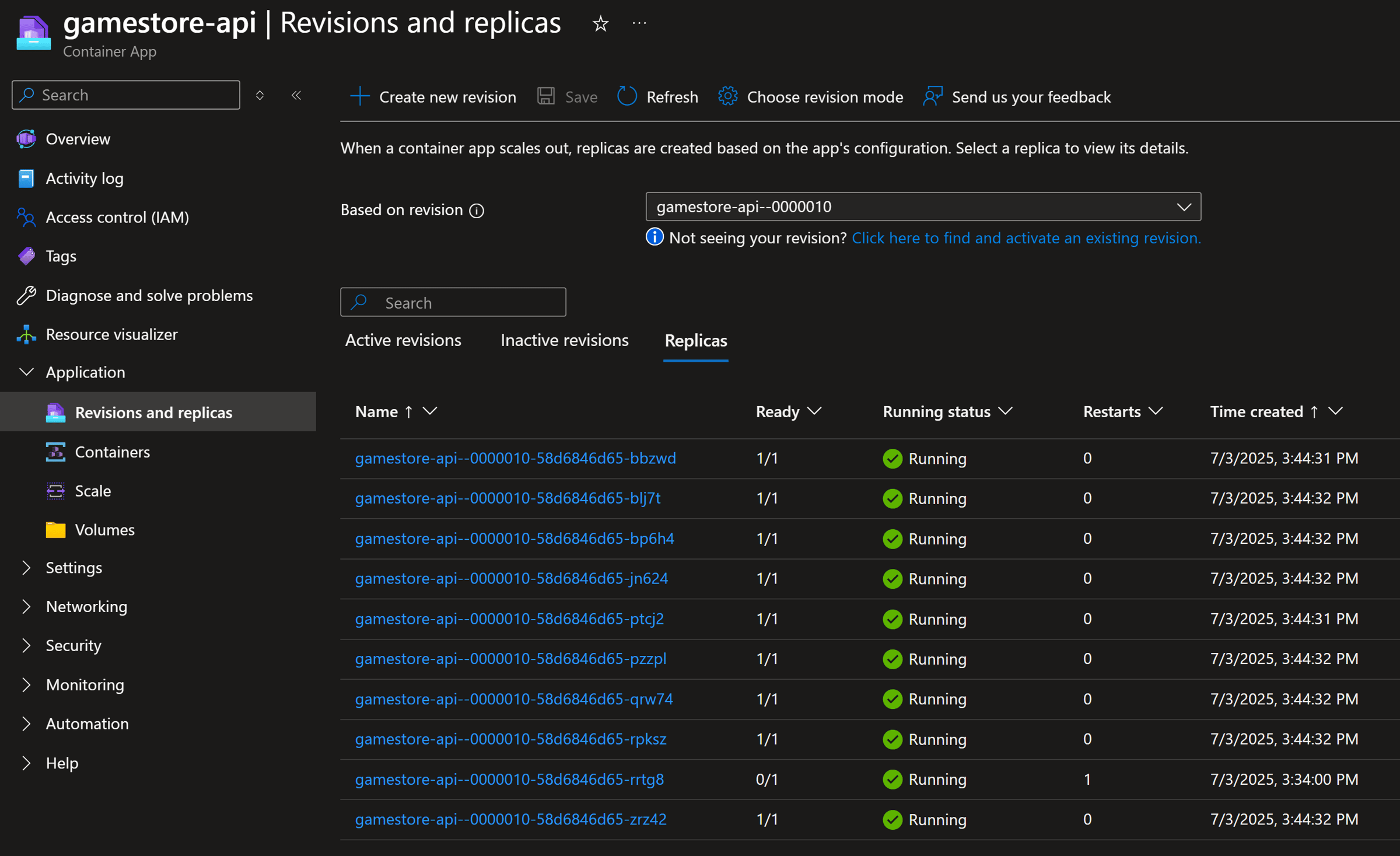

After those 45 seconds, we can confirm that, given our scaling rules, we have gone up from 1 to 10 replicas:

Now, let’s run our real test, same command as before:

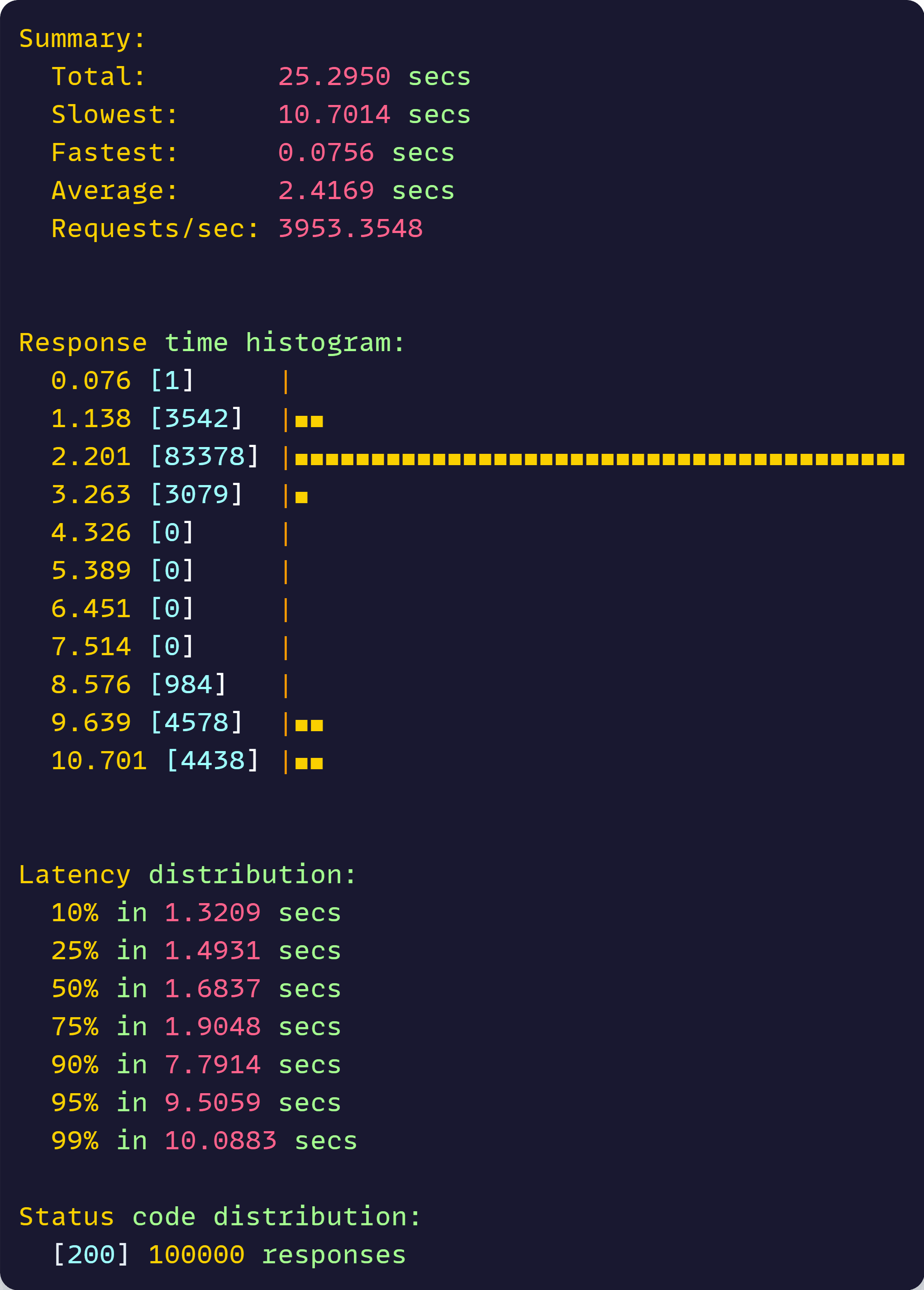

Here are our new results:

Just that final line, where we can see all 100,000 requests returned a 200 OK response, is an amazing achievement.

But let’s do a more detailed comparison.

A dramatic difference

Here’s how horizontal scaling with containers, Azure Container Apps, and .NET Aspire completely transformed our API’s performance:

| Metric | 1 Replica | 10 Replicas | Improvement |
|---|---|---|---|
| Test Duration | 101.15 seconds | 25.30 seconds | 4x faster completion |
| Success Rate | 66.6% | 100% | Zero failures |
| Throughput | 989 req/sec | 3,953 req/sec | 4x more requests handled |
| Average Response | 7.86 seconds | 2.42 seconds | 69% faster responses |
| Median Response | 8.44 seconds | 1.68 seconds | 80% improvement |
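The Improvement column can be reproduced from the measured values. A short sketch, where Ratio and Reduction are hypothetical helper names introduced just for this check:

```csharp
using System;

// Measured values from the comparison table.
double duration1 = 101.15, duration10 = 25.30;   // seconds
double rps1 = 989, rps10 = 3953;                 // requests per second
double avg1 = 7.86, avg10 = 2.42;                // seconds
double median1 = 8.44, median10 = 1.68;          // seconds

// How many times larger a is than b.
static double Ratio(double a, double b) => a / b;

// Percentage drop from the baseline value.
static double Reduction(double before, double after) =>
    100.0 * (before - after) / before;

Console.WriteLine($"Duration:   {Ratio(duration1, duration10):F1}x shorter");   // about 4.0x
Console.WriteLine($"Throughput: {Ratio(rps10, rps1):F1}x higher");              // about 4.0x
Console.WriteLine($"Average:    {Reduction(avg1, avg10):F0}% lower");           // about 69%
Console.WriteLine($"Median:     {Reduction(median1, median10):F0}% lower");     // about 80%
```

Note that 10 times the replicas produced about 4 times the throughput in this run, not 10 times.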

The amazing results speak for themselves :)

And for real users using our .NET API, this would mean that:

- With 1 replica, 1 in 3 users would have gotten errors or timeouts - a completely unacceptable user experience.

- With 10 replicas, every single user got a successful response, with a median response time of 1.68 seconds.

Mission Accomplished!

Wrapping Up

The numbers don’t lie. Horizontal scaling didn’t just improve performance—it transformed a failing system into a reliable one.

This is the reality of modern web applications. When traffic spikes hit, having adequate replicas means the difference between users getting served versus getting frustrated with error pages.

The difference between a 66% success rate and a 100% success rate isn’t just a number; it’s the difference between losing customers and keeping them.

Production-ready applications don’t happen by accident—they’re built with the right tools and patterns from the start.

And that’s all for today.

See you next Saturday.

P.S. Tomorrow is the last day of my summer sale—22% off everything. Perfect timing if you want to dive deeper into containers, .NET Aspire, or Azure deployment.

Whenever you’re ready, there are 3 ways I can help you:

- .NET Backend Developer Bootcamp: A complete path from ASP.NET Core fundamentals to building, containerizing, and deploying production-ready, cloud-native apps on Azure.

- Building Microservices With .NET: Transform the way you build .NET systems at scale.

- Get the full source code: Download the working project from this article, grab exclusive course discounts, and join a private .NET community.